Cops Detection and Tracking

2020

This project started in 2020.

I built this project for academic and experimental purposes. My goal was to detect and classify a worker by uniform and other available data. I chose law enforcement personnel because data on them was convenient to gather.

The construction of this project included:

- Utilizing different object detection models trained on the COCO dataset to gather new data and classify it

- Building and using a simple C# program for fast labelling

- Building a large labelled dataset of more than 3500 images in less than 3 hours

- Designing, modifying and training various CNNs for classification on this dataset

- Integration of tracking and filtering algorithms to the detection and classification processes

- Improving the end classification result of a person by using previous data gathered on that person

I tested the program on different online videos and on a remote camera feed that was set up on a Raspberry Pi. I got very good results on the limited data I managed to test on, but there is much room for improvement.

Ideas for future improvements:

- Try applying a combination of RNNs and unsupervised learning methods to the statistical data for more accurate classification

- Use GANs to extend the dataset and improve the CNN classification model

- Add a version in TF Lite for the Raspberry Pi

- Use multiple feeds and combine the data

Cops Detection and Tracking - Pipeline Overview

This project inspired the TrackEverything package, and this repository now has an example using it. You can find an older part of this project in one of my repositories here; that repository contains only the small webcam implementation, not the whole project.

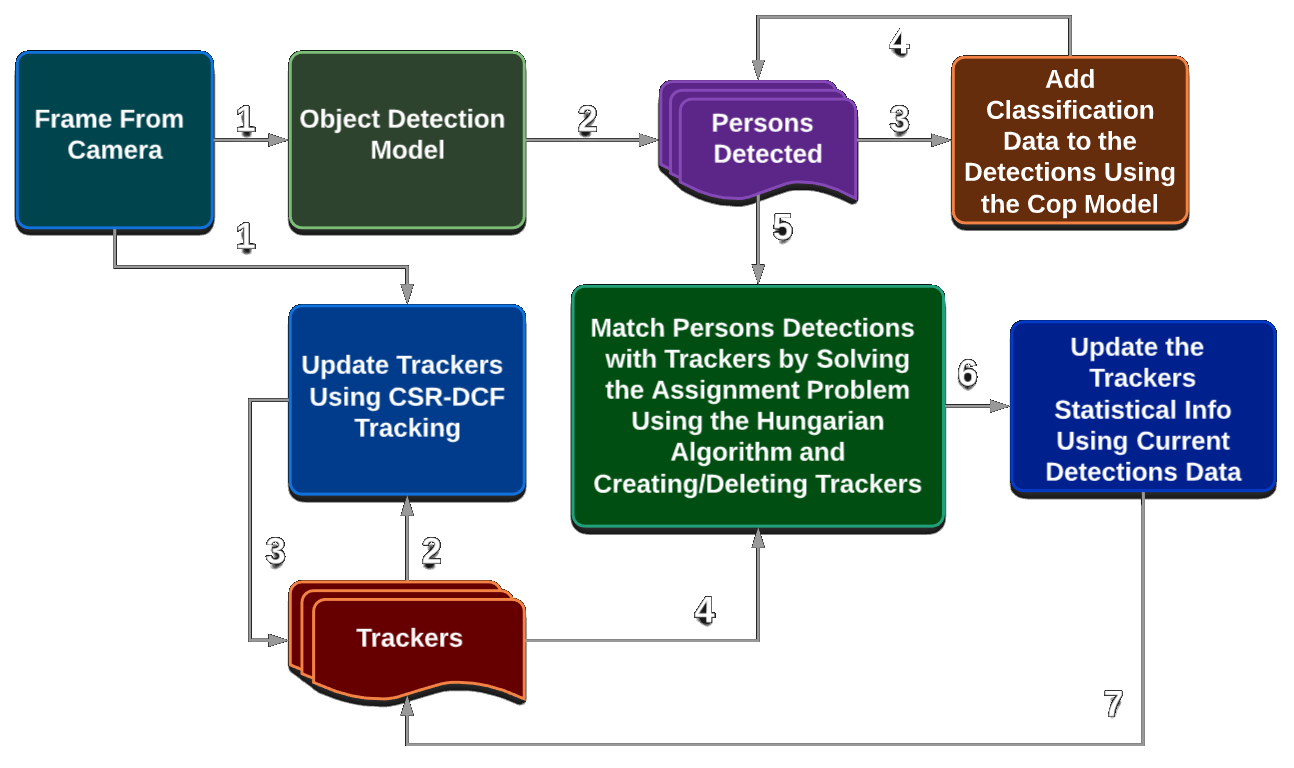

The Pipeline

The pipeline receives a series of images (frames) and outputs a list of tracker objects, each holding a detected person and the probability of that person being a cop.

Breaking It Down into 5 Steps

1st Step - Get All Detections in Current Frame

First, we take the frame and pass it through an object detection model. I use the base of this Object Detection repository (I modified the TF1 version, since the TF2 version only came out 10 days ago). The model is trained on the COCO dataset and detects around 90 different object classes. I tried models with different architectures, such as Faster RCNN InceptionV2 and MobileNetV2. I use the model to get all the persons detected in a frame, then filter out redundant overlapping detections using Non-maximum Suppression (NMS).
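To illustrate the NMS filtering, here is a minimal NumPy sketch of the greedy variant. It is not the project's actual code: the function name, the `[x1, y1, x2, y2]` box layout, and the 0.5 threshold are assumptions made for the example.

```python
import numpy as np

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS sketch. boxes: (N, 4) as [x1, y1, x2, y2]; scores: (N,).

    Returns the indices of the boxes to keep, highest score first.
    The threshold value is an assumption, not taken from the project.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    if len(boxes) == 0:
        return []
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    order = np.argsort(scores)[::-1]  # indices sorted by descending score
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        # Intersection of the best box with every remaining box
        x1 = np.maximum(boxes[best, 0], boxes[rest, 0])
        y1 = np.maximum(boxes[best, 1], boxes[rest, 1])
        x2 = np.minimum(boxes[best, 2], boxes[rest, 2])
        y2 = np.minimum(boxes[best, 3], boxes[rest, 3])
        inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
        iou = inter / (areas[best] + areas[rest] - inter)
        # Drop every remaining box that overlaps the best one too much
        order = rest[iou <= iou_threshold]
    return keep

# Two near-identical person boxes and one separate box
boxes = [[10, 10, 110, 210], [12, 14, 112, 208], [300, 50, 380, 220]]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)
```

Here the second box overlaps the first almost entirely, so only the first and third boxes survive.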

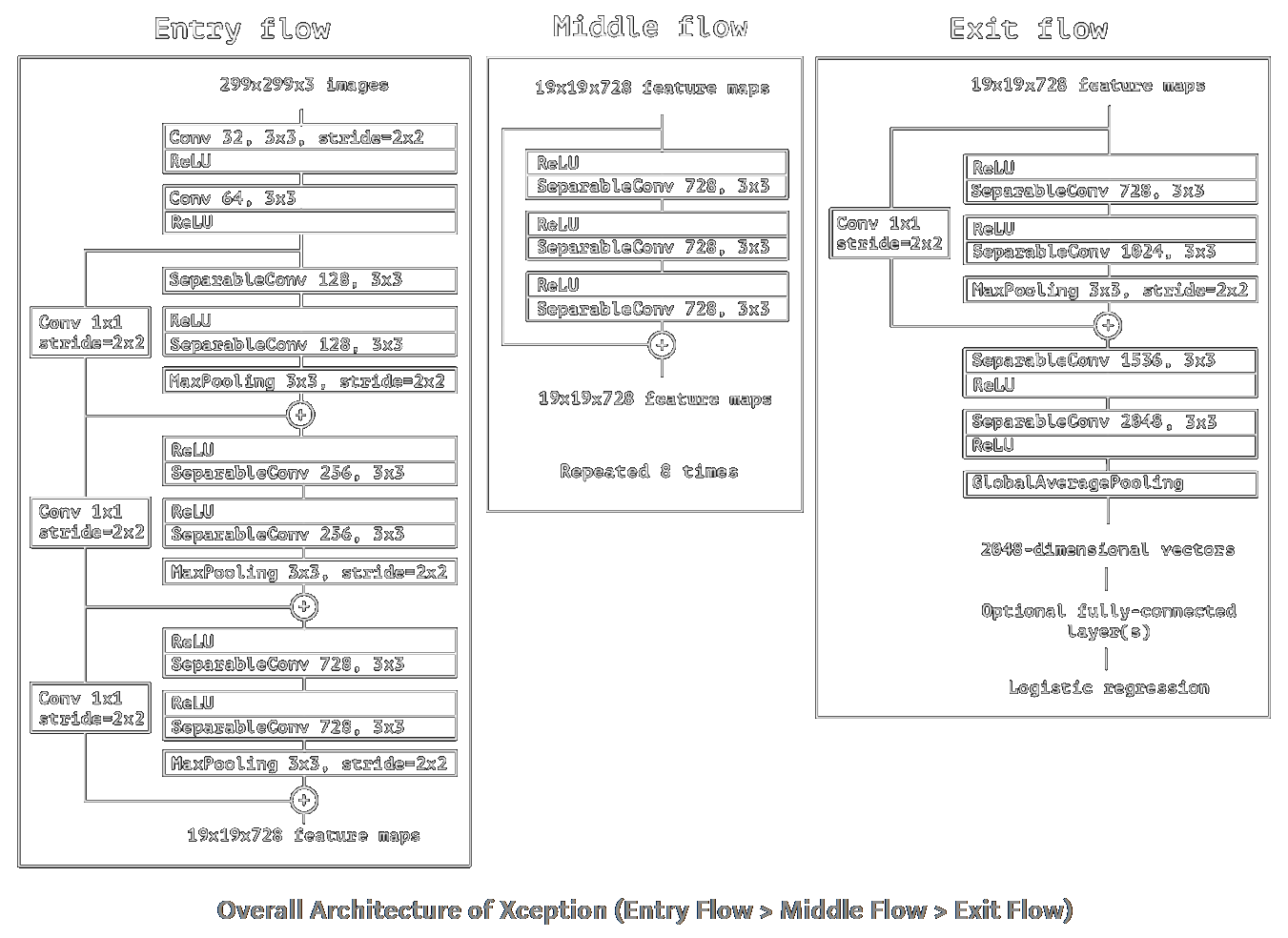

2nd Step - Get Classification Probabilities for the Detected Persons

After we have the persons from step 1, we pass them through a classification model to determine the probability of each being a cop. I trained this model using the Xception CNN architecture with some added layers; I chose this architecture for its low parameter count, since my GPU’s capacity is limited.

Then we create our Detections object list, which contains the position boxes and the classification data.
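A rough sketch of how such a Detections list could be assembled is shown below. The `Detection` dataclass, `build_detections`, and the `dummy_classifier` stand-in are all hypothetical names; the real project uses a trained Xception-based model, which is replaced here by a trivial brightness rule so the example runs on its own.

```python
import numpy as np
from dataclasses import dataclass
from typing import Tuple

@dataclass
class Detection:
    """Hypothetical container: a person box plus classification data."""
    box: Tuple[int, int, int, int]   # (x1, y1, x2, y2) in pixels
    class_probs: np.ndarray          # assumed layout: [p_not_cop, p_cop]

def build_detections(frame, boxes, classify):
    """Crop each person box from the frame and classify the crop."""
    detections = []
    for x1, y1, x2, y2 in boxes:
        crop = frame[y1:y2, x1:x2]   # rows are y, columns are x
        probs = np.asarray(classify(crop), dtype=float)
        detections.append(Detection((x1, y1, x2, y2), probs))
    return detections

def dummy_classifier(crop):
    # Stand-in for the CNN: uses mean brightness as a fake "cop" probability
    p = float(crop.mean()) / 255.0
    return [1.0 - p, p]

frame = np.zeros((240, 320, 3), dtype=np.uint8)
frame[50:150, 100:160] = 255         # a bright patch inside the first box
detections = build_detections(
    frame, [(100, 50, 160, 150), (0, 0, 50, 50)], dummy_classifier
)
```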

3rd Step - Update the Trackers Object List

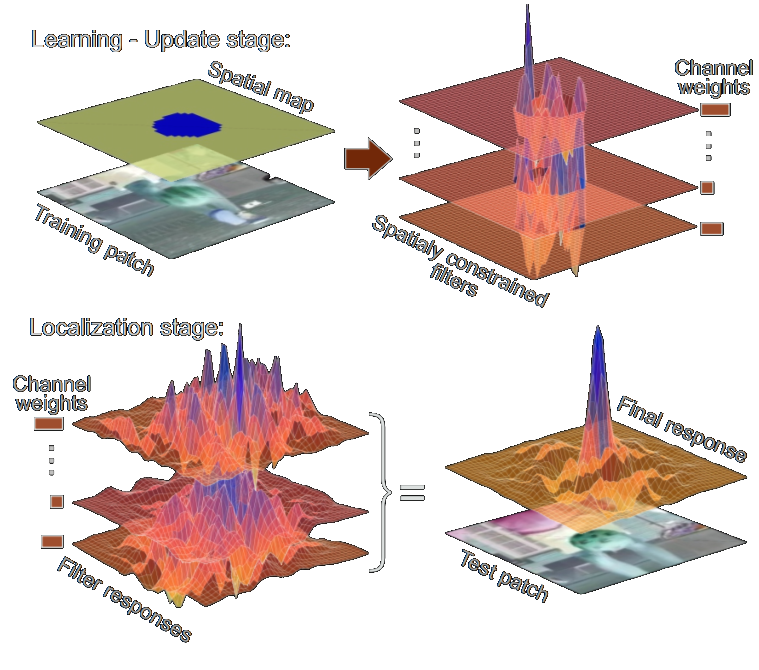

We keep a list of Tracker objects. Each is an instance of a class that contains an OpenCV CSRT tracker (a Discriminative Correlation Filter tracker with Channel and Spatial Reliability).

Overview of the CSR-DCF approach. An automatically estimated spatial reliability map restricts the correlation filter to the parts suitable for tracking (top), improving localization within a larger search region and performance for irregularly shaped objects. Channel reliability weights calculated in the constrained optimization step of the correlation filter learning reduce the noise of the weight-averaged filter response (bottom).

My tracker class also contains a unique ID, previous statistics about this ID, and indicators of the tracker’s accuracy. In the first frame the Trackers list is empty; it is filled in step 4 with new trackers matching the detected objects. If the Trackers list is not empty, in this step we update the trackers’ positions using the current frame and dispose of failed trackers.
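The bookkeeping side of this step can be sketched without OpenCV. In the sketch below, `TrackedPerson`, `update_trackers`, and the `max_failures` tolerance are hypothetical; in the real pipeline each `(ok, box)` pair would come from calling the OpenCV tracker's `update(frame)` method, which returns a success flag and a bounding box.

```python
import itertools
from dataclasses import dataclass, field

_next_id = itertools.count(1)  # source of unique tracker IDs

@dataclass
class TrackedPerson:
    """Hypothetical wrapper; the real class would also hold a CSRT tracker."""
    box: tuple
    track_id: int = field(default_factory=lambda: next(_next_id))
    failures: int = 0          # consecutive failed updates

def update_trackers(trackers, results, max_failures=0):
    """Apply one (ok, box) result per tracker and drop failed trackers.

    max_failures=0 disposes of a tracker on its first failed update;
    the tolerance is an assumption, not a value from the project.
    """
    alive = []
    for tracker, (ok, box) in zip(trackers, results):
        if ok:
            tracker.box = box
            tracker.failures = 0
        else:
            tracker.failures += 1
        if tracker.failures <= max_failures:
            alive.append(tracker)
    return alive

a = TrackedPerson(box=(0, 0, 10, 10))
b = TrackedPerson(box=(20, 20, 30, 30))
# Pretend the first tracker followed its target and the second one lost it
alive = update_trackers([a, b], [(True, (1, 2, 11, 12)), (False, None)])
```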

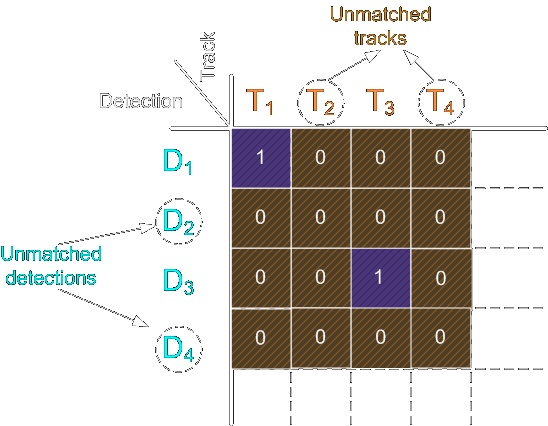

4th Step - Matching Detection with Trackers

We use the intersection over union (IOU) of a tracker bounding box and a detection bounding box as the matching metric. We then solve the linear sum assignment problem (also known as minimum weight matching in bipartite graphs) for the IOU matrix, a problem classically solved by the Hungarian algorithm (also known as the Munkres algorithm). The scientific computing package SciPy has a built-in utility function, linear_sum_assignment, that solves this assignment problem.

matched_idx = linear_sum_assignment(-IOU_mat)

By default, linear_sum_assignment minimizes the cost, so we negate the IOU matrix to maximize the total IOU instead.
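As a self-contained illustration of the matching, the sketch below builds the IOU matrix for a few hand-made boxes and passes its negation to `scipy.optimize.linear_sum_assignment`. The helper names `iou` and `match`, the box layout `(x1, y1, x2, y2)`, and the `min_iou` cutoff for rejecting weak pairs are assumptions for the example.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, x2 - x1) * max(0, y2 - y1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match(tracker_boxes, detection_boxes, min_iou=0.3):
    """Return (matches, unmatched_trackers, unmatched_detections)."""
    iou_mat = np.zeros((len(tracker_boxes), len(detection_boxes)))
    for t, t_box in enumerate(tracker_boxes):
        for d, d_box in enumerate(detection_boxes):
            iou_mat[t, d] = iou(t_box, d_box)
    # Negate so that minimizing the cost maximizes the total IOU
    rows, cols = linear_sum_assignment(-iou_mat)
    # Keep only pairs that overlap enough (min_iou is an assumed cutoff)
    matches = [(int(r), int(c)) for r, c in zip(rows, cols)
               if iou_mat[r, c] >= min_iou]
    matched_t = {t for t, _ in matches}
    matched_d = {d for _, d in matches}
    unmatched_t = [t for t in range(len(tracker_boxes)) if t not in matched_t]
    unmatched_d = [d for d in range(len(detection_boxes)) if d not in matched_d]
    return matches, unmatched_t, unmatched_d

trackers = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
matches, unmatched_trackers, unmatched_detections = match(trackers, detections)
```

In this example tracker 0 pairs with detection 1 and tracker 1 with detection 0, while detection 2 overlaps nothing and is left unmatched.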

The result will look like this:

For each unmatched detection, we create a new tracker with the detection’s data. For each unmatched tracker, we update its accuracy indicators and remove it if it is way off. For each matched pair, we update the tracker position to the more accurate detection box, then take the class data and average it with the tracker’s previous 15 data points.
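The averaging over recent data points can be sketched with a fixed-length buffer. The `ClassHistory` name is hypothetical, and the default window of 16 (the current data point plus the previous 15) is one reading of the description, not a confirmed detail of the project.

```python
from collections import deque
import numpy as np

class ClassHistory:
    """Hypothetical rolling buffer of class probabilities for one tracker."""

    def __init__(self, window=16):
        # window=16 assumes "current + previous 15" data points
        self._history = deque(maxlen=window)

    def add(self, probs):
        """Store the newest probabilities and return the rolling average."""
        self._history.append(np.asarray(probs, dtype=float))
        return self.mean()

    def mean(self):
        # Stack the stored vectors and average them per class
        return np.stack(list(self._history)).mean(axis=0)

history = ClassHistory(window=3)     # small window to keep the demo short
first = history.add([1.0, 0.0])
second = history.add([0.0, 1.0])
history.add([0.0, 1.0])
latest = history.add([0.0, 1.0])     # the oldest entry has now dropped out
```

Averaging this way smooths out a single bad classification, at the cost of reacting more slowly when the evidence genuinely changes.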

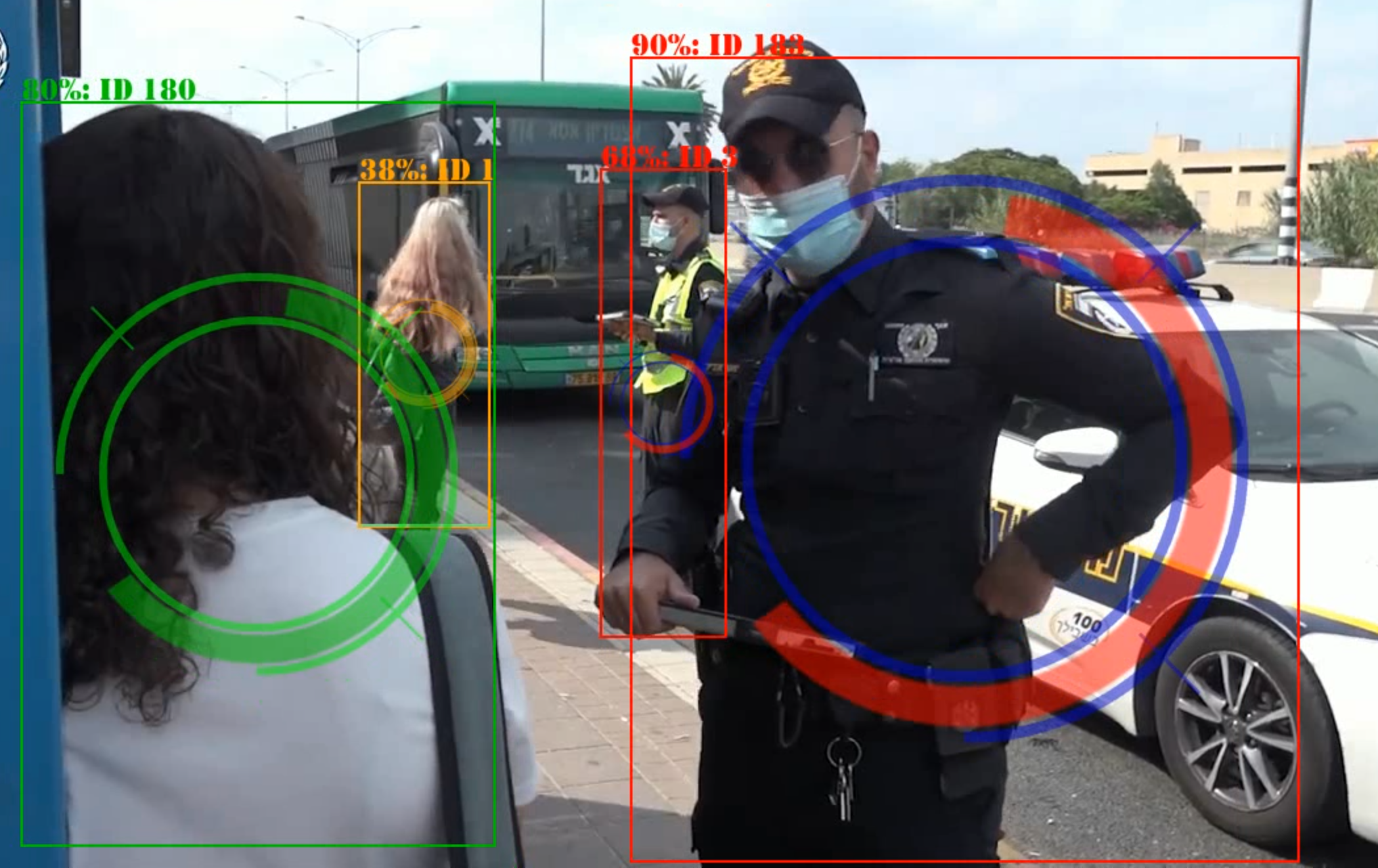

5th Step - Decide What to Do

After step 4, the Trackers list is up to date with all the statistical and current data. The tracker class has a method that returns the current classifications and the confidence of those scores; we then update the detections and iterate through them. A detection with a low confidence score probably came from a tracker without enough data, or the detection itself is poor, so we mark it in orange. A detection with a high enough confidence score is marked green if it’s not a cop, and red/blue if it is. Unmatched trackers that are not dead are shown in cyan.
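The color rules above can be written as a small decision function. This is only a sketch: `pick_color` and both threshold values are assumptions, since the text does not state what counts as a high enough confidence or probability.

```python
def pick_color(cop_prob, confidence, matched=True,
               min_confidence=0.6, cop_threshold=0.5):
    """Map a tracked person's state to a display color label.

    Both thresholds are illustrative assumptions, not project values.
    """
    if not matched:
        return "cyan"        # live tracker with no detection this frame
    if confidence < min_confidence:
        return "orange"      # not enough data, or a poor detection
    if cop_prob >= cop_threshold:
        return "red/blue"    # confidently classified as a cop
    return "green"           # confidently classified as not a cop

colors = [
    pick_color(cop_prob=0.9, confidence=0.8),
    pick_color(cop_prob=0.1, confidence=0.8),
    pick_color(cop_prob=0.9, confidence=0.2),
    pick_color(cop_prob=0.9, confidence=0.8, matched=False),
]
```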

- Programmed in Python.

- Over 2.8K lines of code. This figure may include comment lines and some modified library files.

- TensorFlow, OpenCV, NumPy, Pandas, Pillow, Requests, SciPy & MultiProcessing used in Python.

| Lang/Lib/Pro | Version |
|---|---|
| Python | 3.8.1 |
| TensorFlow | 2.2.0 |
| OpenCV | 4.2.0.34 |
| Jupyter | 1.0.0 |
| Matplotlib | 3.2.1 |
| NumPy | 1.18.4 |
| Pandas | 1.0.4 |
| Pillow | 7.1.2 |
| Requests | 2.23.0 |
| SciPy | 1.4.1 |
| TF-Slim | 1.1.0 |
| MultiProcessing | in Python 3.8 |
| Type | Python Scripts & Jupyter Notebooks |
| Input | Camera Feed |
| Output | Persons in Frame Classification |
| Special Components | Camera |

Example
